Why a Fairly Powerful PC Can Lag When Playing 8K YouTube Videos, Even With Sufficient Internet Bandwidth

At the end of 2019, I purchased my current desktop, which is still a fairly powerful PC: it has a Seasonic 620W power supply, an AMD Ryzen 5 3600X processor with DeepCool liquid cooling, 16GB of HyperX Predator Black DDR4 RAM at 3200MHz, a GIGABYTE GeForce RTX 2070 SUPER Windforce OC 3X 8GB GDDR6 256-bit graphics card, a Samsung 970 EVO Plus 250GB PCI Express x4 M.2 2280 SSD for the operating system, a Kingston A400 480GB SSD for files, and a 144Hz Dell monitor.

I haven’t used this computer very often, and I still don’t, because most of what I do now I do on the laptop I purchased last year, the Vivobook 15 OLED (M513, AMD Ryzen 5000 series). I don’t have much time to play games, edit video, or do similar things on the desktop. Most of the time it stays turned off, collecting dust. When I do turn it on, I use it to listen to music on the Logitech Z-5500 audio system, connected through the Cambridge Audio DAC Magic 100 external sound card, or I connect it to the living room TV over HDMI on special occasions or when I have guests.

When I purchased this computer, I was able to watch 8K videos on YouTube without any problems, with minimal CPU usage and very little GPU usage. Even though I don’t have an 8K monitor, my rule is to watch a video at its native resolution, because that is how it looks best, especially since YouTube compresses videos heavily to save disk space and internet bandwidth.

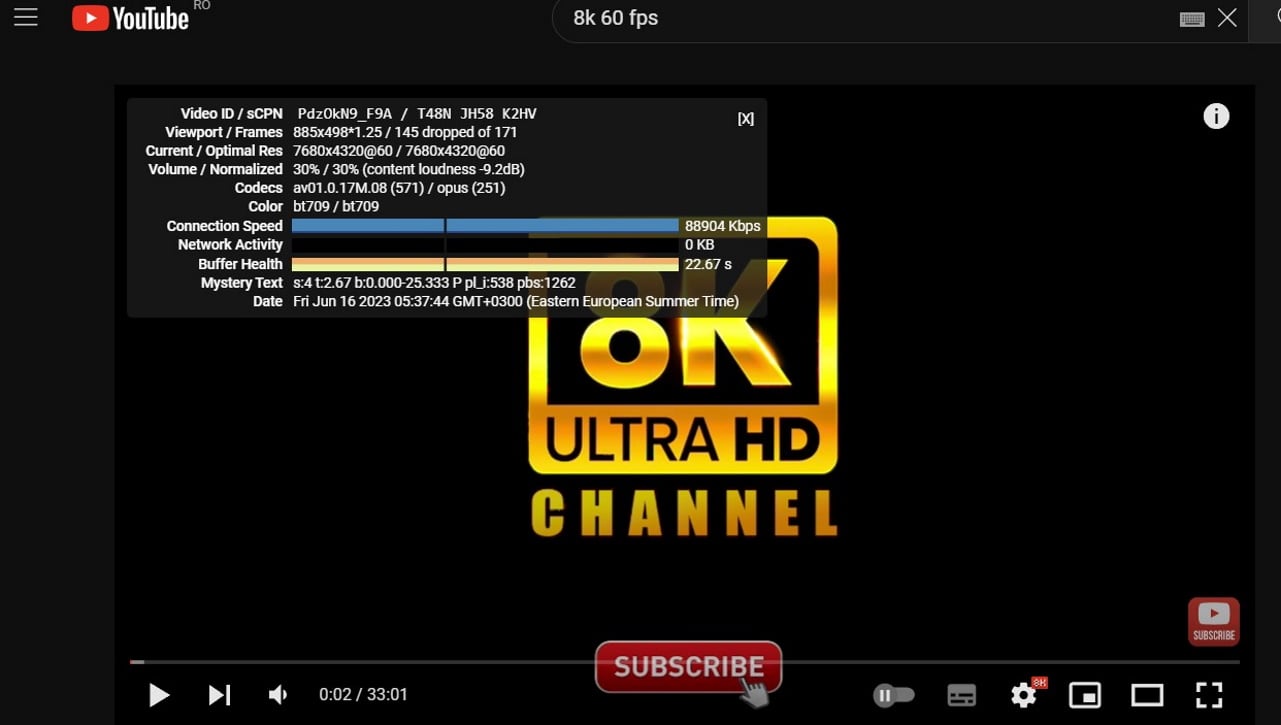

This year, when I tried to watch an 8K video on YouTube, the CPU spiked to 100% and the video started lagging. I immediately thought that something must have changed, because in the past watching 8K videos on YouTube was not a problem. So I right-clicked on the YouTube player and chose the “Stats for nerds” option. There, I noticed that YouTube had started using a different codec for 8K than for 4K, and at 4K I had no problem playing YouTube videos. At the moment, YouTube uses the VP9 codec for 4K, which my graphics card (RTX 2070 SUPER) can decode in hardware, while for 8K it now uses the AV1 codec, which my graphics card cannot decode in hardware. AV1 hardware decoding requires a graphics card from at least the next generation, the RTX 3XXX series.
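The check I did by eye in “Stats for nerds” can also be written down as a small sketch. Everything here is illustrative: the function names, the sample codec strings, and the hand-written list of codecs the card decodes in hardware are assumptions made for this example, not something YouTube or NVIDIA expose in this form. It relies only on the usual codec string prefixes (`av01` for AV1, `vp09` for VP9, `avc1` for H.264).

```typescript
// Codec families this sketch knows about.
type CodecFamily = "AV1" | "VP9" | "H.264" | "unknown";

// Map the prefix of a codec string (as displayed in "Stats for nerds")
// to a codec family.
function codecFamily(codecString: string): CodecFamily {
  const id = codecString.trim().toLowerCase();
  if (id.startsWith("av01")) return "AV1";
  if (id.startsWith("vp09") || id.startsWith("vp9")) return "VP9";
  if (id.startsWith("avc1")) return "H.264";
  return "unknown";
}

// Assumption: a hand-written list for the RTX 2070 SUPER from this post,
// which decodes VP9 and H.264 in hardware but not AV1.
const rtx2070SuperHwDecode: ReadonlySet<CodecFamily> =
  new Set<CodecFamily>(["VP9", "H.264"]);

// True when the stream's codec is not in the hardware list, so decoding
// would land on the CPU.
function fallsBackToCpu(
  codecString: string,
  hwDecode: ReadonlySet<CodecFamily>
): boolean {
  return !hwDecode.has(codecFamily(codecString));
}

// Sample codec strings, chosen for illustration only.
console.log(fallsBackToCpu("vp09.00.51.08", rtx2070SuperHwDecode)); // false
console.log(fallsBackToCpu("av01.0.17M.08", rtx2070SuperHwDecode)); // true
```

With the VP9 string the function reports that the GPU can handle the stream, and with the AV1 string it reports a CPU fallback, which matches what I saw at 4K and 8K.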

Clearly, decoding 8K video is not the processor’s job but the graphics card’s, which has dedicated video decoding hardware designed specifically for this task. What I don’t understand is why YouTube, or rather Google, effectively forces you to upgrade your graphics card even if your current one is still powerful enough to handle all modern games and programs.

Maybe there are differences between VP9 and AV1, or at least that’s what they say, but personally I don’t notice any difference in quality between VP9 at 8K and AV1 at 8K, and I consider myself a detail-oriented person. It’s not the first time YouTube/Google has done something like this: when the transition was made from Flash Player to the HTML5 player, the HTML5 player was not yet well optimized, and at that time it consumed a lot more processor power, and therefore electrical energy, than Flash Player.

The same thing is happening now: instead of setting a rule that users with graphics cards from the RTX 2XXX series and below get VP9, and those with graphics cards from the RTX 3XXX series and above get AV1, YouTube thought it would be good to put their foot down and force everyone to use the AV1 codec at 8K, which, without hardware decoding, eats processor power and electrical energy. Such decisions astonish me, and I don’t understand them.
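To make the rule I have in mind concrete, here is a minimal sketch of it. It is not how YouTube actually selects codecs; `DecodeReport` and `chooseCodec` are hypothetical names. The three flags are modeled on what the browser’s Media Capabilities API (`navigator.mediaCapabilities.decodingInfo()`) reports, namely `supported`, `smooth`, and `powerEfficient`, but they are passed in as plain data so the example runs outside a browser. Treating `powerEfficient` as a sign of hardware decoding is an assumption, not a guarantee.

```typescript
// Hypothetical per-codec report, modeled on the Media Capabilities API result.
interface DecodeReport {
  codec: string;           // label such as "AV1" or "VP9"
  supported: boolean;      // the device can decode this configuration at all
  smooth: boolean;         // playback is expected to run without dropped frames
  powerEfficient: boolean; // assumed here to indicate hardware decoding
}

// Walk the codecs in order of preference and pick:
//   1. the first one that is smooth and power efficient,
//   2. otherwise the first one that is at least smooth,
//   3. otherwise the first one that is supported at all.
function chooseCodec(byPreference: DecodeReport[]): string | null {
  const efficient = byPreference.find(
    (r) => r.supported && r.smooth && r.powerEfficient
  );
  if (efficient) return efficient.codec;

  const smooth = byPreference.find((r) => r.supported && r.smooth);
  if (smooth) return smooth.codec;

  const any = byPreference.find((r) => r.supported);
  return any ? any.codec : null;
}

// Illustrative values for an RTX 2XXX machine at 8K: AV1 only in software.
const rtx2xxx: DecodeReport[] = [
  { codec: "AV1", supported: true, smooth: false, powerEfficient: false },
  { codec: "VP9", supported: true, smooth: true, powerEfficient: true },
];

// Illustrative values for an RTX 3XXX machine at 8K: both in hardware.
const rtx3xxx: DecodeReport[] = [
  { codec: "AV1", supported: true, smooth: true, powerEfficient: true },
  { codec: "VP9", supported: true, smooth: true, powerEfficient: true },
];

console.log(chooseCodec(rtx2xxx)); // "VP9"
console.log(chooseCodec(rtx3xxx)); // "AV1"
```

The point of the sketch is that AV1 stays the preferred codec wherever the hardware can handle it, and older cards simply keep the VP9 stream they already play well.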

I am all for technological progress, but it needs to be done with care, not through forced measures.

Google has a monopoly when it comes to the Google Chrome browser and YouTube, so they do whatever they want. They have complete control over the software from source to destination, yet instead of implementing a rule like the one I described above, they decided to introduce something that most people using a desktop or laptop cannot take advantage of.

And that’s how they force you to upgrade components, even if it’s not necessary. I am disgusted by such practices, and I do not approve of them at all!

What is your opinion when things like this happen, and you can no longer watch videos on YouTube that “yesterday” you could play without any problems?